AI does not just write, film, or dub. It blitzes through the full content stack at what is basically lightspeed. The tech is not the bottleneck anymore: trust is. Audiences, platforms, and an increasingly likely wave of regulators all want to know what is real, what is AI-generated, and exactly who had their digital fingers on the file. For content marketers and automation teams, this is not philosophy. It is an engineering problem at the foundation of your pipeline. Ready or not, authenticity has become a product feature, and your stack needs a trust layer just as it needs a CRM or a reliable automation engine. If you are scaling content automation, this is now baseline. See the latest policy and pipeline guidance in “Provenance Pipelines Supercharge AI Marketing” and our playbooks for credential-native workflows in “Build Credential Native Content Pipelines.”

The punchline? Authenticity is both an ops discipline and a risk strategy. Platforms are auto-labeling synthetic media, ad policies are tightening, and content credentials are shifting from nice-to-have to required for distribution. Teams that wire provenance checks directly into their automation workflows ship faster and suffer fewer costly review holds. Ignore it, and you will end up knee-deep in appeals, demonetized posts, and unwanted legal disputes.

The New Reality Check for Automated Content

What was hard about automated content last year? Generation speed.

What is hard today? Governing trust at scale: on platforms, in the eyes of regulators, and for your audience. There are three tidal changes every builder and marketer has to surf:

- Platform mandates for disclosure: Major platforms now require explicit labels for content that includes realistic synthetic media. Miss a label and you risk throttling, demonetization, or takedowns. These labels are increasingly prominent for sensitive, high-stakes topics.

- End-to-end transparency policies in ads: Ad networks are rolling out stricter rules, especially in categories like health, finance, and politics. Fuzzy definitions and “close enough” will not cut it.

- Open standards going mainstream: Content Credentials and C2PA-backed tooling have moved from pilot to practice across creative suites and devices. Teams can embed tamper-evident, portable provenance data without custom development. See our deeper guide in “Build Your AI Content Provenance Layer Fast.”

Here is some good news: retrofitting your stack is doable, and it is cheaper than constantly firefighting review holdups. Authenticity is just another data layer. Track it, budget it, and log it at every stage.

Provenance Is Not One Thing. Let’s Map It

If your provenance strategy is just “add a watermark,” you are already two steps behind. Here are the five core techniques. Combine them for the deepest coverage:

| Technique | What it does | Where it helps | Weak spots | Automation hooks |
|---|---|---|---|---|
| Platform disclosure labels | Flags assets as AI-modified or fully synthetic for viewers | Meets platform and ad policies | Can be bypassed or forgotten if not enforced programmatically | Auto-tag on upload; block unflagged assets at publish step |
| Content credentials | Embeds signed, machine-verifiable edit history and provenance into files | Cross-tool, cross-platform authenticity; fits enterprise stacks | Some tools still strip metadata; privacy/PII risk if not managed | Stamp on export, verify at every ingest and before publish |
| Invisible watermarks | Imprints AI-origin indicators within pixels or audio | Detects generative origin when metadata is lost; survives light edits | Can degrade from compression or heavy post-process; not always proof | Apply at render, track detection stats in QA passes |
| Hashing & manifests | Links assets to cryptographic hashes/manifests stored separately | Reliable audit trail and tamper detection over versions/releases | Strict controls needed; not self-describing for end users | Auto-log to content DB; pin hashes to assets on each version |
| Human attestations | Captures documented consent for likeness/music/data use | Critical for faces, voices, sensitive personal data | Painful if not digitally managed and linked; often handled as paper or loose files | E-sign at intake; store attestation references in manifest |

From Vibes to Receipts. Design the Authenticity Pipeline

Gorgeous content. Boring back office. That is the secret to reliable, scalable provenance. No-code, low-code, and app-stack teams win here only if their trust layer runs as smoothly as their generation engine.

1. Make Policies Explicit and Programmable

Hardcode disclosure logic. Slides and Slack pings are not a control system. Program your orchestration layer to enforce the policy. See this reference policy snippet:

    {
      "auth_policy": {
        "disclose_on": ["ai_voice", "ai_face", "ai_video_edit", "ai_photo_realistic"],
        "sensitive_topics": ["health", "finance", "elections"],
        "require_prominent_label": true,
        "credentials": {
          "enabled": true,
          "issuer": "brand_signing_service",
          "include": ["tool_list", "edit_ops", "claim_sources"],
          "redact": ["pii", "geo_exact"]
        },
        "watermark": {"image": true, "video": true, "audio": true},
        "attestations": {"likeness": "required_if_present", "music_license": "required_if_present"}
      }
    }
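A policy file only acts as a control if the pipeline refuses to run with a broken one. As a minimal sketch, assuming the policy is stored as JSON text, the loader below fails fast when a section is missing. The function name `load_auth_policy` and the `REQUIRED_KEYS` set are hypothetical, and the inline policy is a trimmed copy of the snippet above.

```python
import json

# Sections every policy is expected to carry (taken from the reference snippet).
REQUIRED_KEYS = {
    "disclose_on", "sensitive_topics", "require_prominent_label",
    "credentials", "watermark", "attestations",
}


def load_auth_policy(raw: str) -> dict:
    """Parse policy JSON and fail fast if a required section is missing."""
    policy = json.loads(raw)["auth_policy"]
    missing = REQUIRED_KEYS - set(policy)
    if missing:
        raise ValueError(f"auth_policy is missing: {sorted(missing)}")
    if not isinstance(policy["require_prominent_label"], bool):
        raise ValueError("require_prominent_label must be true or false")
    return policy


# Trimmed copy of the reference policy, used here only for illustration.
POLICY_JSON = """
{"auth_policy": {
  "disclose_on": ["ai_voice", "ai_face", "ai_video_edit", "ai_photo_realistic"],
  "sensitive_topics": ["health", "finance", "elections"],
  "require_prominent_label": true,
  "credentials": {"enabled": true, "issuer": "brand_signing_service"},
  "watermark": {"image": true, "video": true, "audio": true},
  "attestations": {"likeness": "required_if_present"}
}}
"""

policy = load_auth_policy(POLICY_JSON)
print(policy["disclose_on"])
```

Running the loader at orchestrator start-up, rather than per asset, means a bad policy edit stops the pipeline before anything ships.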

2. Stamp on Export, Verify on Import

Add provenance at the end of every creative step and verify it on entry to the next. A failure at either point should halt the job and escalate. Machines, not humans, should be your first line of defense.

    {
      "jobs": {
        "render_video": {
          "steps": [
            "generate_script",
            "synthesize_voice",
            "edit_video",
            "render",
            "stamp_credentials",
            "apply_watermark",
            "write_manifest"
          ]
        },
        "publish_youtube": {
          "preflight": [
            "verify_credentials",
            "check_disclosure_needed",
            "enforce_label",
            "attach_attestations"
          ],
          "on_fail": "send_to_editor_review"
        }
      }
    }
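The preflight list in that job definition can be read as an ordered gate: run each check, stop at the first failure, and hand the asset to the `on_fail` route. The sketch below illustrates that control flow only. The `run_preflight` function, the asset field names, and the placeholder lambdas are assumptions; real checks would call a credential verifier and the platform's API.

```python
from typing import Callable

# Each check takes the asset record and returns True on pass.
Check = Callable[[dict], bool]


def run_preflight(asset: dict, checks: dict[str, Check], on_fail: str) -> dict:
    """Run checks in order; stop at the first failure and name the escalation route."""
    for name, check in checks.items():
        if not check(asset):
            return {"publish": False, "failed_step": name, "route_to": on_fail}
    return {"publish": True, "failed_step": None, "route_to": None}


# Placeholder checks keyed by step name (hypothetical asset fields).
CHECKS: dict[str, Check] = {
    "verify_credentials": lambda a: a.get("credentials_verified", False),
    "enforce_label": lambda a: (not a.get("disclosure_needed", False))
    or a.get("label_applied", False),
    "attach_attestations": lambda a: (not a.get("has_likeness", False))
    or bool(a.get("likeness_attestation_id")),
}

ok = run_preflight(
    {"credentials_verified": True, "disclosure_needed": True, "label_applied": True},
    CHECKS,
    "send_to_editor_review",
)
blocked = run_preflight(
    {"credentials_verified": True, "disclosure_needed": True, "label_applied": False},
    CHECKS,
    "send_to_editor_review",
)
print(ok)
print(blocked)
```

Returning the name of the failed step, not just a boolean, gives the reviewing editor something specific to fix.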

3. Automate Disclosure Routing

No more “did we label this?” guessing games. Build disclosure into your router with clear, testable rules.

    {
      "disclosure_router": {
        "inputs": {"ops": ["ai_voice", "ai_face"], "topic": "finance", "realism": 0.92},
        "rules": [
          {"if": "ops.length > 0", "then": "label_required"},
          {"if": "topic in sensitive_topics", "then": "prominent_label"},
          {"if": "realism > 0.8", "then": "label_required"}
        ],
        "output": {"label": "synthetic_media", "prominent": true}
      }
    }
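Those three rules translate directly into a small, testable function. This is a sketch under two assumptions that the rules leave open: the 0.8 threshold is taken from the example as-is, and prominence is applied only when a label is required in the first place. The name `route_disclosure` is hypothetical.

```python
SENSITIVE_TOPICS = {"health", "finance", "elections"}
REALISM_THRESHOLD = 0.8  # taken from the example rules; tune per channel


def route_disclosure(ops: list[str], topic: str, realism: float) -> dict:
    """Apply the three router rules and return the label decision."""
    # Rules 1 and 3: any AI operation, or high realism, requires a label.
    label_required = len(ops) > 0 or realism > REALISM_THRESHOLD
    # Rule 2 (assumed to apply only to labeled assets): sensitive topics get prominence.
    prominent = label_required and topic in SENSITIVE_TOPICS
    return {
        "label": "synthetic_media" if label_required else None,
        "prominent": prominent,
    }


print(route_disclosure(["ai_voice", "ai_face"], "finance", 0.92))
```

Because the function is pure, each rule can be pinned with a unit test and rerun whenever a platform changes its labeling policy.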

4. Receipt Logging for Everything

When the business or compliance asks, you do not want to be the team that is “pretty sure” it is compliant. Keep a receipt for every finished asset.

    {
      "receipt": {
        "asset_id": "vid_20451",
        "hash": "sha256:2fa...",
        "credentials": {"issuer": "brand_signing_service", "verified": true},
        "disclosure": {"applied": true, "prominent": true, "reason": ["ai_voice", "sensitive_topic"]},
        "attestations": {"likeness_id": "att_778", "music_license_id": "mus_332"},
        "cost": {"compute_usd": 0.67, "human_minutes": 2},
        "approver": "editor_17"
      }
    }
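The `hash` field is what makes a receipt useful in a dispute: recompute it from the file and compare. A minimal sketch using Python's standard `hashlib`, assuming the asset bytes fit in memory; `build_receipt` and `matches_receipt` are hypothetical names, and the receipt is trimmed to a few of the fields shown above.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the digest in the same 'sha256:<hex>' form the receipt uses."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def build_receipt(asset_id: str, data: bytes, issuer: str, verified: bool,
                  reasons: list[str], approver: str) -> dict:
    """Assemble a trimmed receipt for one finished asset."""
    return {
        "asset_id": asset_id,
        "hash": sha256_of(data),
        "credentials": {"issuer": issuer, "verified": verified},
        "disclosure": {"applied": bool(reasons), "reason": reasons},
        "approver": approver,
    }


def matches_receipt(receipt: dict, data: bytes) -> bool:
    """Tamper check: recompute the hash and compare it to the stored one."""
    return receipt["hash"] == sha256_of(data)


video_bytes = b"rendered video bytes"  # stand-in for the real file contents
receipt = build_receipt("vid_20451", video_bytes, "brand_signing_service",
                        True, ["ai_voice", "sensitive_topic"], "editor_17")
print(matches_receipt(receipt, video_bytes))
print(matches_receipt(receipt, video_bytes + b"!"))
```

For large video files, the same digest can be computed by feeding chunks to `hashlib.sha256().update()` instead of reading the whole file at once.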

Platform Shifts: Why This Matters Immediately

Create with AI, distribute with headaches? Not if you integrate correctly. Major platforms now require labels on realistic AI-generated or AI-altered media, with only minor edits typically exempt. Products from creative suites to mobile apps have shipped support for signed content credentials, which makes the technical install far easier than a year ago. For an applied overview and rollout steps, see “Build Credential Native Content Pipelines.”

Stack-level implication? If your pipeline auto-injects disclosures and credentials, your creatives sail through reviews. If not, you are stuck in appeals with time and ad dollars bleeding out while you fight compliance fires or patch corrupted metadata. Make trust native and stop building workaround after workaround.

Performance Cost and Privacy: The Unskippable Considerations

Provenance is not free, but it is far cheaper than reacting late or patching for every new round of media panic. The smart approach splits trust work three ways:

- On-device/in-editor: Apply watermarks and prepare credential data at render time, while the data is still private and easy to audit.

- Orchestration layer: Add and verify credentials and disclosure just before push-to-live. This is where your disclosure router does its job.

- Archive: Store tight, hashed manifests and receipts only. Enough for audits, not enough to snowball privacy risk.

Bonus: Small, lightweight models can handle routine checks such as label presence and credential schema, routing only ambiguous edge cases to heavier tools. This controls cost and maximizes speed.
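The tiering idea can be sketched without any model at all: a cheap deterministic pass settles the clear cases, and only the undecided ones go to the expensive tier, up to a cap. Everything here is illustrative. The `cheap_check` and `triage` names and the asset fields are assumptions, and in practice the first tier might be a small model instead of plain field checks.

```python
def cheap_check(asset: dict) -> str:
    """Deterministic first pass: returns 'pass', 'fail', or 'unsure'."""
    if not asset.get("label_present"):
        return "fail"
    creds = asset.get("credentials")
    if not isinstance(creds, dict) or "issuer" not in creds:
        return "fail"
    signature = creds.get("signature_valid")
    if signature is None:
        # Schema looks right, but the signature has not been validated yet.
        return "unsure"
    return "pass" if signature else "fail"


def triage(assets: list[dict], max_escalations: int) -> dict:
    """Send only 'unsure' assets to the heavy tier, and cap how many go."""
    result = {"pass": [], "fail": [], "escalate": [], "deferred": []}
    for asset in assets:
        verdict = cheap_check(asset)
        if verdict != "unsure":
            result[verdict].append(asset["id"])
        elif len(result["escalate"]) < max_escalations:
            result["escalate"].append(asset["id"])
        else:
            # Over budget: hold for the next run instead of spending more.
            result["deferred"].append(asset["id"])
    return result


batch = [
    {"id": "a1", "label_present": True, "credentials": {"issuer": "x", "signature_valid": True}},
    {"id": "a2", "label_present": False},
    {"id": "a3", "label_present": True, "credentials": {"issuer": "x"}},
    {"id": "a4", "label_present": True, "credentials": {"issuer": "x"}},
]
print(triage(batch, max_escalations=1))
```

The cap turns verification spend into a setting you choose per run, which is what keeps escalation budgeted.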

Brand Safety Is a KPI Now

If no one is measuring it, no one is improving it. Here are the metrics automation-first teams adopt for trust pipelines:

- Label coverage rate: Percent of assets needing a label that shipped with one, correct and on time

- Credential integrity rate: Percent of assets with valid, verifiable credentials on first ingest

- Strip rate: Percent of assets whose metadata vanishes downstream. Keep this near zero

- Review hold time: How fast labeled and signed assets publish compared to unlabeled ones

- Appeal or takedown delta: Change in the number of appeals and takedowns, and in the time to clear them, once you keep receipts

- Compliance cost per asset: Total compute and labor. If this climbs year over year, dig in
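
Three of these metrics fall straight out of per-asset records. A minimal sketch, assuming each record carries the boolean fields shown (all field names are hypothetical) and that strip rate is measured against assets that were stamped in the first place. Rates are returned as fractions, not percentages.

```python
def rate(numerator: int, denominator: int) -> float:
    """Safe ratio: an empty denominator yields 0.0 instead of an error."""
    return numerator / denominator if denominator else 0.0


def trust_metrics(records: list[dict]) -> dict:
    """Compute label coverage, credential integrity, and strip rate."""
    needing_label = [r for r in records if r["label_needed"]]
    stamped = [r for r in records if r["credential_stamped"]]
    return {
        "label_coverage_rate": rate(
            sum(r["label_shipped"] for r in needing_label), len(needing_label)),
        "credential_integrity_rate": rate(
            sum(r["credential_valid_on_ingest"] for r in records), len(records)),
        "strip_rate": rate(
            sum(r["metadata_stripped"] for r in stamped), len(stamped)),
    }


def record(needed, shipped, valid, stripped):
    # Helper for building sample rows; every sample asset was stamped.
    return {"label_needed": needed, "label_shipped": shipped,
            "credential_stamped": True, "credential_valid_on_ingest": valid,
            "metadata_stripped": stripped}


sample = [
    record(True, True, True, False),
    record(True, False, True, True),
    record(False, False, False, False),
    record(True, True, True, False),
]
print(trust_metrics(sample))
```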

What To Automate Right Now by Team Size

Creators and Small Teams

- Enable disclosure prompts as default in upload tools for synthetic content

- Adopt export-side credential and hash stamps. Store basic asset manifests in your cloud storage

- Log likeness, music, and data attestations in a single source of truth

- Automate preflight: block publishing if label or credential is missing, notify the designated editor

Mid-market Teams

- Centralize authenticity policy in your main automation and orchestration platform

- Auto-stamp and verify credentials for every variant: image, audio, and video

- Implement a rules-based disclosure router for sensitive topics. Humans only for edge and uncertain cases

- Track and reduce strip rate per channel. Where metadata fails, use overlays or inline labels as fallback

Enterprise & Regulated Organizations

- Treat policy-as-code as the source of truth. Version, approve, and log every policy change

- Partition signing keys and content manifests by region or business unit for data residency

- Set up a monthly regression for provenance: label coverage, credential integrity, and appeals

- Link legal and compliance reviews to pipeline triggers such as missing or unverifiable credentials

Common Failure Modes and How to Fix Them

| Failure Mode | Why it Happens | Fix |
|---|---|---|
| Assets go live without disclosure | Rules live in a wiki or slide, not the pipeline | Enforce via orchestrator, block publish on missing label |
| Metadata gets stripped downstream | Transcoding or export process deletes credentials | Verify on every ingest and publish step, re-stamp or overlay label if lost |
| Excess details leak (privacy risk) | Everything gets logged by default | Redact PII, geo, and internal IDs in policy. Log strictly necessary metadata only |
| Appeals and takedowns drag on | No asset-linked receipts | Keep hashes, signing info, and attestation IDs. Surface with one click |
| Compliance costs spike | Heavy models run every check | Use light models by default. Make escalation evidence-based and capped |

Integrating Authenticity With Your Automation Stack

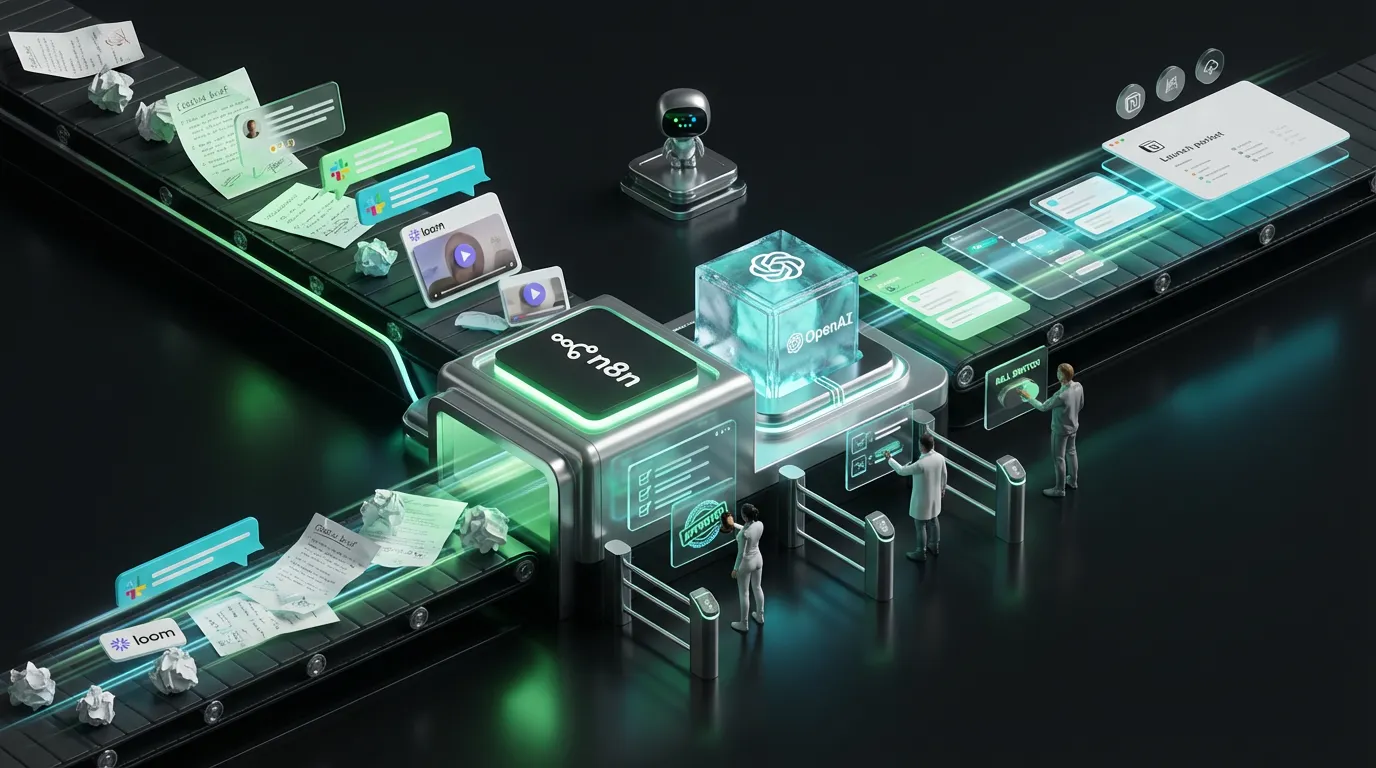

Your existing automation stack already drives content from script to asset to channel. Provenance does not need a separate department. It needs a low-friction trust layer that travels with every asset:

[Inputs]

• product_specs • claims • persona • likeness_docs • music_license

[Create]

• script → voice → edit → render

[Trust]

• watermark → credentials → disclosure router → receipts

[Publish]

• platform_upload → ad_manager → cms

[Observe]

• label_coverage • strip_rate • appeal_delta • cost_per_compliant

Two quick upgrades pay immediate dividends:

1. Deploy a lightweight claims critic. Auto-scan for numeric or regulated claims and block anything without source reference.

2. Add an accessibility check. Ensure captions and alt text are present before assets go live.
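
Both upgrades can start as plain rule checks before any model is involved. The sketch below assumes a house convention in which sources are cited inline as `[src:some_id]`, and assumes videos need captions while images need alt text. The tag format, field names, and function names are all hypothetical.

```python
import re

# Matches numbers such as "40", "3.5", "40%", or "$1,200".
NUMERIC_CLAIM = re.compile(r"\$?\d[\d,]*(?:\.\d+)?%?")
# Assumed convention: a source is cited inline as [src:some_id].
SOURCE_TAG = re.compile(r"\[src:[\w-]+\]")
# Assumed accessibility requirements per asset type.
REQUIRED_FIELDS = {"video": ["captions"], "image": ["alt_text"]}


def unsourced_claims(script: str) -> list[str]:
    """Return sentences that contain a number but no source tag."""
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    return [s for s in sentences
            if NUMERIC_CLAIM.search(s) and not SOURCE_TAG.search(s)]


def missing_accessibility(asset: dict) -> list[str]:
    """Return the required accessibility fields that are empty or absent."""
    required = REQUIRED_FIELDS.get(asset.get("type", ""), [])
    return [field for field in required if not asset.get(field)]


script = ("Cuts review time by 40% [src:q3_report]. "
          "Ships in 2 days. Our best release yet.")
print(unsourced_claims(script))
print(missing_accessibility({"type": "video", "captions": ""}))
```

A regex pass like this will miss claims written out in words and will flag harmless numbers, so it works best as a blocker that routes to a human, not as a final verdict.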

Privacy and Trust Are Two Sides of the Same Coin

Authenticity does not need to turn into oversharing. Store the minimum viable data: hashes, signed references, and IDs. Not raw audio, consent forms, or full legal docs. For likeness or voice, keep consent on the CRM or contract side and reference via manifest. If you operate in multiple territories, split keys and manifests by region. Discipline keeps your trust layer low-risk and lightweight.
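
Data minimization is easy to enforce in code if redaction runs before anything is written to the archive. A minimal sketch, assuming metadata arrives as nested dictionaries and that the keys to drop match the policy's `redact` list; `minimal_manifest` is a hypothetical name.

```python
# Keys to drop, mirroring the "redact" list in the reference policy.
REDACT_KEYS = frozenset({"pii", "geo_exact"})


def minimal_manifest(metadata: dict, redact: frozenset = REDACT_KEYS) -> dict:
    """Drop redacted keys at any nesting depth; keep everything else."""
    cleaned = {}
    for key, value in metadata.items():
        if key in redact:
            continue
        if isinstance(value, dict):
            cleaned[key] = minimal_manifest(value, redact)
        else:
            cleaned[key] = value
    return cleaned


raw = {
    "asset_id": "vid_20451",
    "pii": {"name": "example"},
    "capture": {"geo_exact": [1.0, 2.0], "tool_list": ["editor"]},
    "attestations": {"likeness_id": "att_778"},
}
print(minimal_manifest(raw))
```

The function returns a new dictionary and leaves the input untouched, so the unredacted data never needs to leave the step that produced it.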

Build Your Boringly Reliable Trust Layer: A 30-Day Playbook

Week 1: Map Assets and Policy

- Inventory every asset family and delivery path

- Draft your JSON authenticity policy: what triggers disclosure, what needs stamps, and what gets redacted

- Choose stamping and verification tools. Ensure interoperability across your stack

Week 2: Enable Preflight and Receipts

- Implement pre-publish checks: gate every launch on valid credentials and the correct disclosure label

- Auto-log receipts: hashes, signature issuers, label status, and editor approval

- Wire likeness and music attestations so IDs always travel with the asset

Week 3: Pilot in Two Channels

- Roll out in one organic and one paid channel. Keep human review for edge cases or high risk

- Measure metadata strip rate and review delay. Fix tools that drop key data

- Train editors on what these new trust labels mean. Kill the “useless bureaucracy” narrative with results

Week 4: Scale Up and Add Safeguards

- Expand to all channels, begin weekly reporting on label and credential coverage

- Set cost and compute caps for verification. Steer the budget proactively

- Turn on the claims critic and accessibility checks as last-mile safeguards

Before You Hand the Keys to the Bots

- Automation is not abdication. The gnarly decisions still need human eyes

- Agentic workflows can snowball cost. Keep model escalation evidence-based and budgeted

- Hybrid wins: let AI handle scale and grunt work. Reserve taste and judgment for your humans

Competitive Advantage Few Realize

Here is the truth: trust is distribution. In a feed full of A/B-tested sameness, rapid appeals, and algorithmic blacklists, the teams that ship receipts by habit get more done, faster. Content with built-in provenance glides through platform checks and legal compliance. You get to market and iterate while others are stuck in reinstatement purgatory. In the boardroom, audit trails replace anxiety with confidence. For your customers, a brand that proves how its assets are made is one that takes transparency seriously. For deeper system design, see “The Control Layer for AI Marketers.”

Automation-first is not just more output. It is the difference between a brand always on the front foot and a brand always playing catch-up. Making trust boring and repeatable lets your humans focus on the work only they can do.

Do not wait for trust to be imposed on your workflow. Make it your feature. Build a provenance layer that is routine, reliable, and invisible most days. That is the real engine for AI-powered marketing scale in 2026 and beyond.